Learning Through Documented Experiments

In-depth explorations of prompt engineering techniques, AI limitations, and real-world applications. Case studies can include interactive examples, bilingual videos, and downloadable resources.

What Are Case Studies?

Unlike traditional blog posts, these case studies are detailed experiments where I test prompt engineering techniques, document every step, analyze results critically, and share everything—including what didn't work.

Each case study may include some or all of the following, and more:

- Bilingual video walkthroughs (EN & FR)

- Downloadable prompts and templates

- Before/after interactive comparisons

- Expandable deep-dive sections

- Live demos and deployed applications

- Detailed analysis and key findings

All Case Studies (5)

XML-Structured AI Agents: Optimizing for How Models Actually Work

I discovered that XML structuring could make my 7 Context Engineers more token-efficient, reduce attention degradation, and enforce priority hierarchies. Here's the redesign.

Anthropic recommends XML tags for prompt clarity. Before implementing the 7 Context Engineers for 777-1, I restructured their definitions from prose to hierarchical XML. Here's why this matters for token efficiency, attention management, and context engineering.

Visual QA on Autopilot: Building a Self-Correcting AI Pipeline

I built a pipeline where Claude screenshots apps, finds visual bugs, and fixes them itself.

Too lazy to screenshot every UI bug? I built a workflow where Claude sees and fixes its own visual mistakes. The score improved from 63 to 77 in the first phase; then things got interesting.

Meet the Team: 7 Custom Subagents Built from 129 Code Reviews

Real names. Real personalities. Real job descriptions. Download the markdown files and use them yourself.

I analyzed 129 code reviews and extracted the 7 most common issues. Then I turned each one into a subagent with a name, a personality, and a detailed job description. Here they are.

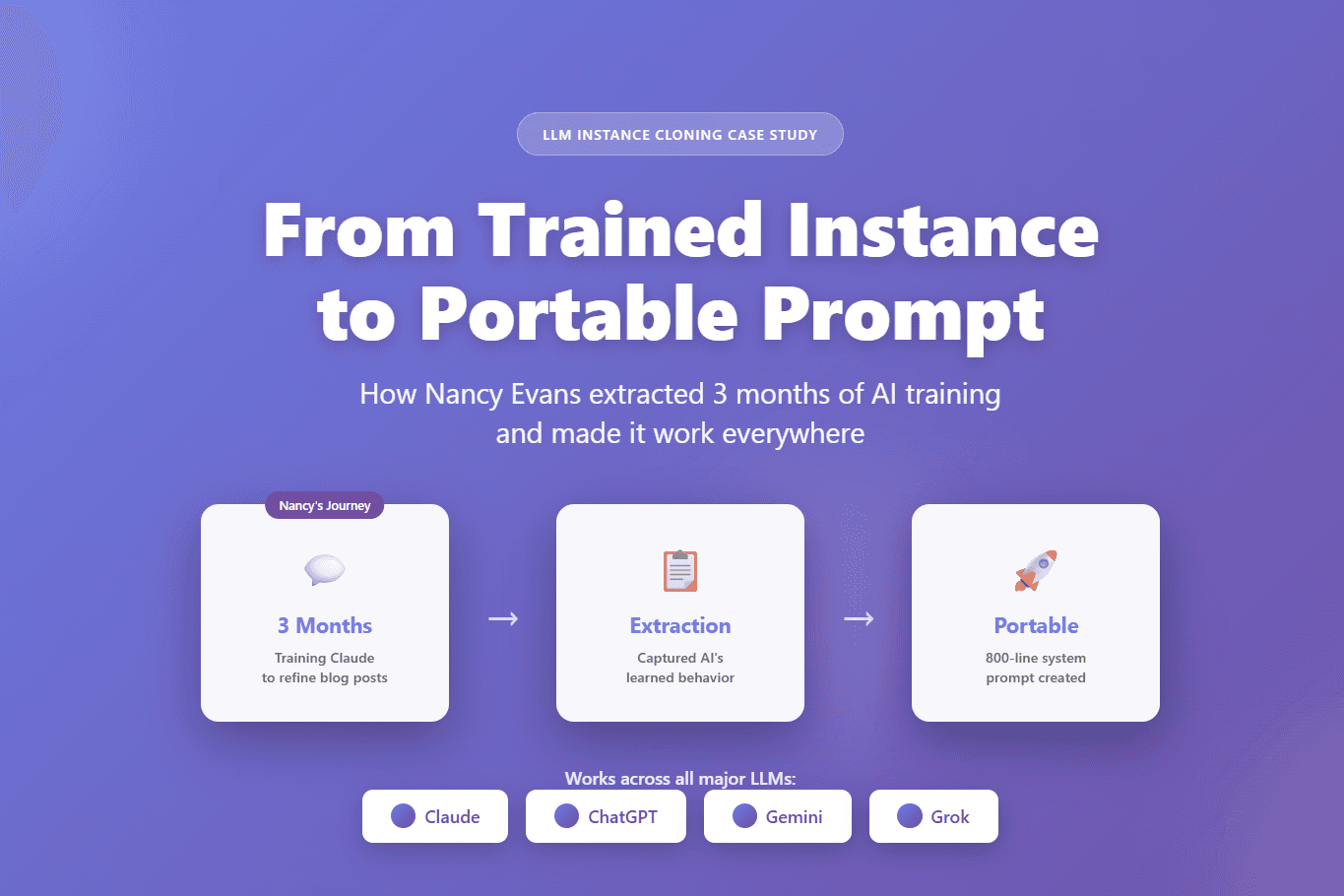

From Trained Instance to Portable Prompt: Extracting Nancy's Blog Refiner

How a financial blogger captured 3 months of AI training and made it work across Claude, ChatGPT, Gemini, and Grok

I had the perfect blog refiner. Then I lost it. Here's how I used prompt extraction to recover my trained AI's personality and make it work everywhere.

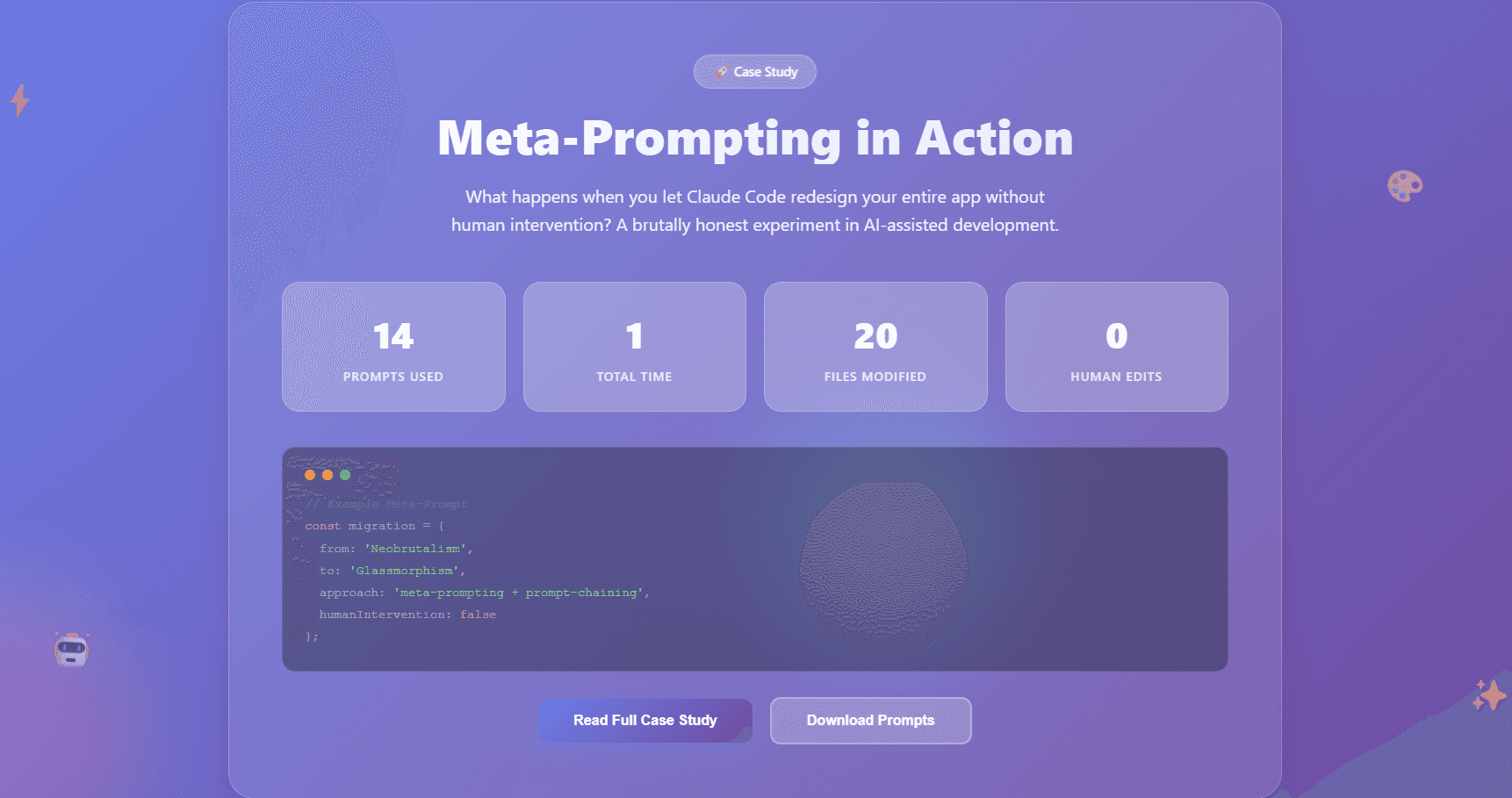

Meta-Prompting in Action: What Happens When You Let Claude Code Redesign Your App

A brutally honest experiment in trusting AI without human oversight

I asked Claude to write detailed prompts for Claude Code, then executed them without any testing or modifications. Here's what worked brilliantly—and what broke completely.